Code coverage stats - are they helpful?

Code coverage - how many lines and branches of code are executed during a test - is easy to measure in many languages and test frameworks. At first glance, measuring code coverage seems like a no-brainer. After all, who wouldn't want to make sure their code is sufficiently tested?

But just because a metric is easy to measure doesn't necessarily mean it is helpful.

Why code coverage can be misleading

Code coverage tools measure which lines (and branches) are executed during a test, but those measurements don't tell you how valuable the tests are.

Assertion quality

One of the limitations of code coverage tools is that they only measure which code is executed during a test, not whether the test verified that the code works correctly.

For example, I can write a test that covers a lot of code but has minimal verification. In this case, I have a method that returns a list of the slowest test cases in a given test run. But my test only verifies that the returned list of slow tests is not empty:

```kotlin
@Test
fun `should fetch slowest test cases`() {
    val publicId = randomPublicId()
    val limit = 5

    val testCaseDatabaseRepository = TestCaseDatabaseRepository(dslContext)

    val slowTestCases = runBlocking { testCaseDatabaseRepository.fetchSlowTestCases(publicId, limit) }

    // Only checks that something came back, not which test cases or in what order
    expectThat(slowTestCases).isNotEmpty()
}
```

This test doesn't verify that the code is returning the correct values, so it will pass in a whole variety of situations where the underlying code is incorrect.

Also, that test will have the same code coverage as a more valuable test that verifies the correct values are returned:

```kotlin
@Test
fun `should fetch slowest test cases`() {
    val publicId = randomPublicId()
    val limit = 5

    val testCaseDatabaseRepository = TestCaseDatabaseRepository(dslContext)

    val slowTestCases = runBlocking { testCaseDatabaseRepository.fetchSlowTestCases(publicId, limit) }

    // Checks the number of results and that their durations come back slowest-first
    expectThat(slowTestCases)
        .hasSize(5)
        .map(TestCase::duration)
        .containsExactly(
            BigDecimal("25.000"),
            BigDecimal("24.000"),
            BigDecimal("23.000"),
            BigDecimal("22.000"),
            BigDecimal("21.000")
        )
}
```

Code coverage stats alone aren't enough here; we also need a person to look at the test assertions and confirm they thoroughly verify that the code behaves correctly.
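To make this concrete, here is a minimal, self-contained sketch. `TestCase`, the two `fetchSlowTestCases` variants, and the sample data are hypothetical in-memory stand-ins for the repository above (not Projektor's code), and plain Kotlin functions stand in for the Strikt assertions. Both checks run exactly the same implementation code, but only the stronger one notices that the buggy variant returns the fastest test cases instead of the slowest.

```kotlin
import java.math.BigDecimal

// Hypothetical stand-in for the test case model used in the tests above.
data class TestCase(val name: String, val duration: BigDecimal)

// Intended behavior: return the `limit` slowest test cases, slowest first.
fun fetchSlowTestCases(testCases: List<TestCase>, limit: Int): List<TestCase> =
    testCases.sortedByDescending { it.duration }.take(limit)

// Buggy variant: sorts ascending, so it returns the fastest test cases instead.
fun fetchSlowTestCasesBuggy(testCases: List<TestCase>, limit: Int): List<TestCase> =
    testCases.sortedBy { it.duration }.take(limit)

// Mirrors `isNotEmpty()`: passes for both implementations.
fun weakAssertionPasses(result: List<TestCase>): Boolean = result.isNotEmpty()

// Mirrors `hasSize(5)` plus `containsExactly(...)` on the durations.
fun strongAssertionPasses(result: List<TestCase>): Boolean =
    result.map { it.duration } ==
        listOf("25.000", "24.000", "23.000", "22.000", "21.000").map { BigDecimal(it) }

// Hypothetical sample data: durations from 1.000 to 25.000 seconds.
val sampleTestCases: List<TestCase> =
    (1..25).map { i -> TestCase("test$i", BigDecimal("$i.000")) }
```

Calling either variant with a limit of 5 satisfies `weakAssertionPasses`, while `strongAssertionPasses` is true only for the correct one.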

Treats all code equally

Another limitation of code coverage tools is that they treat all code as equally critical. This isn't a knock on the tools themselves; automated tools like these can't magically determine how critical a given area of code is. That requires a person.

Say I'm creating an online banking application. It will have a whole host of capabilities, ranging from the mundane (viewing a memo line on a deposited check) to the critical (depositing funds into an account).

I could write a test suite with 95% code coverage of this banking app and still miss critical features in my tests - such as depositing money into the correct account. Relying solely on the code coverage metric isn't enough; again, we need a person to look at which areas are tested and ensure the most valuable ones are thoroughly tested.
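A bit of arithmetic shows how an aggregate percentage can hide this. The module names and line counts below are hypothetical, as is the idea of tagging modules as critical: the point is that the overall figure weights every line equally, so a completely untested deposit module barely moves it. Deciding which modules deserve the `critical` flag is exactly the judgment a person has to supply.

```kotlin
// Hypothetical per-module line counts for the banking app example.
data class ModuleCoverage(
    val name: String,
    val coveredLines: Int,
    val totalLines: Int,
    val critical: Boolean,
)

val modules = listOf(
    ModuleCoverage("check-memo-display", 1_900, 1_900, critical = false),
    ModuleCoverage("account-settings", 1_900, 2_000, critical = false),
    ModuleCoverage("deposit-funds", 0, 100, critical = true),
)

// Every line counts the same, regardless of how critical its module is.
fun overallLineCoverage(modules: List<ModuleCoverage>): Double =
    100.0 * modules.sumOf { it.coveredLines } / modules.sumOf { it.totalLines }

// Critical modules whose own coverage is below a threshold (hypothetical policy).
fun undertestedCriticalModules(modules: List<ModuleCoverage>, minPercent: Double): List<String> =
    modules
        .filter { it.critical && 100.0 * it.coveredLines / it.totalLines < minPercent }
        .map { it.name }
```

Here the overall line coverage comes out to exactly 95% (3,800 of 4,000 lines), even though `deposit-funds` has no coverage at all.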

Ways code coverage can be helpful

While code coverage tools have limitations, there are ways these tools can help.

Finding code not covered by any tests

While code coverage tools alone can't tell you whether individual tests are valuable, they are great at finding code that isn't covered by any test. By showing which parts of the code aren't executed during any test, coverage tools can show you where the test suite needs augmenting.

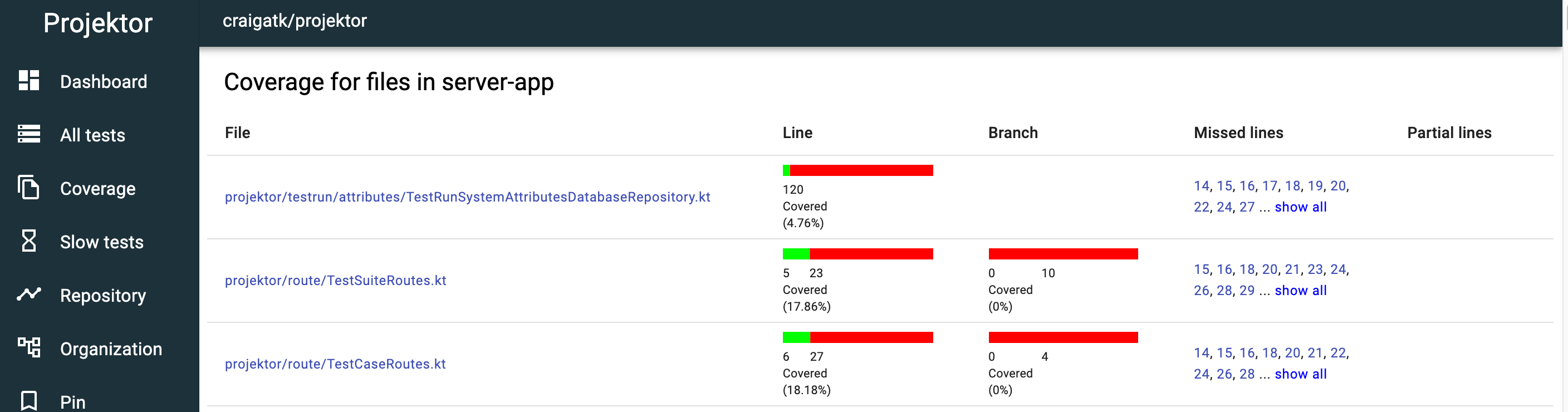

In the above example, almost all of the lines in those files aren't covered by tests. These areas of the codebase are prime candidates for adding additional tests.
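If your coverage tool writes a machine-readable report, finding these gaps can be scripted. The sketch below assumes a JaCoCo XML report (a common format for JVM projects; the original doesn't say which tool produced the screenshot above) and ranks source files by the number of missed lines, using only the JDK's built-in XML parser. `FileLineCoverage` and `filesByMissedLines` are hypothetical names; the `sourcefile` and `counter` elements and their `type`, `missed`, and `covered` attributes follow JaCoCo's report format.

```kotlin
import java.io.ByteArrayInputStream
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

data class FileLineCoverage(val path: String, val missed: Int, val covered: Int)

// Reads the per-file LINE counters from a JaCoCo XML report, most missed lines first.
fun filesByMissedLines(reportXml: String): List<FileLineCoverage> {
    val factory = DocumentBuilderFactory.newInstance()
    // JaCoCo reports declare a DOCTYPE pointing at report.dtd; don't try to fetch it.
    factory.setFeature("http://apache.org/xml/features/nonvalidating/load-external-dtd", false)
    val document = factory.newDocumentBuilder().parse(ByteArrayInputStream(reportXml.toByteArray()))

    val sourceFiles = document.getElementsByTagName("sourcefile")
    val results = mutableListOf<FileLineCoverage>()
    for (i in 0 until sourceFiles.length) {
        val sourceFile = sourceFiles.item(i) as Element
        val packageName = (sourceFile.parentNode as Element).getAttribute("name")
        // The file's LINE counter is a direct child of its <sourcefile> element.
        val children = sourceFile.childNodes
        for (j in 0 until children.length) {
            val child = children.item(j)
            if (child is Element && child.tagName == "counter" && child.getAttribute("type") == "LINE") {
                results += FileLineCoverage(
                    path = "$packageName/${sourceFile.getAttribute("name")}",
                    missed = child.getAttribute("missed").toInt(),
                    covered = child.getAttribute("covered").toInt(),
                )
            }
        }
    }
    return results.sortedByDescending { it.missed }
}
```

Files at the top of the returned list are the ones with the most untested lines, which makes them natural starting points for new tests.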

The screenshot above also shows that, in addition to identifying lines of code that aren't covered, code coverage tools can show you branches that aren't covered. These missing branches can indicate conditions or logic that the existing test suite doesn't exercise.
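The difference between line and branch coverage is easiest to see on a one-line conditional. In this hypothetical function, running only `testMemberDiscount` executes the function's single body line, so line coverage typically counts that line as covered, while branch coverage still reports the `else` branch as missed. Adding `testNonMemberPrice` is what covers both branches.

```kotlin
// Hypothetical pricing rule: members get 10% off (amounts in cents).
fun priceAfterDiscount(totalCents: Int, isMember: Boolean): Int =
    if (isMember) totalCents * 9 / 10 else totalCents

// On its own, this test executes the body line but only the `isMember = true` branch.
fun testMemberDiscount() {
    check(priceAfterDiscount(1_000, isMember = true) == 900)
}

// This test exercises the remaining `else` branch.
fun testNonMemberPrice() {
    check(priceAfterDiscount(1_000, isMember = false) == 1_000)
}
```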

Finding the coverage delta in a code change

With a bit of additional work, code coverage tools can also show you the difference in coverage between a given code change and the main codebase. This difference in the code coverage percentage can be valuable when reviewing a code change.
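The delta itself is simple arithmetic: compute the coverage percentage for the mainline branch and for the change, then subtract. This is a minimal sketch with hypothetical names and numbers, not Projektor's implementation, and it expresses the delta in percentage points of line coverage.

```kotlin
import kotlin.math.round

// Covered and total line counts from one coverage run.
data class CoverageStats(val coveredLines: Int, val totalLines: Int) {
    val percentage: Double
        get() = if (totalLines == 0) 0.0 else 100.0 * coveredLines / totalLines
}

// Positive: the change raised coverage. Negative: the change lowered it.
fun coverageDelta(mainline: CoverageStats, change: CoverageStats): Double =
    change.percentage - mainline.percentage

// Rounds to two decimal places for display, e.g. in a pull request comment.
fun formatDelta(delta: Double): String {
    val rounded = round(delta * 100) / 100
    return if (rounded >= 0) "+$rounded%" else "$rounded%"
}
```

For example, with a mainline at 800 of 1,000 lines covered (80%), a change that adds 100 lines but covers only 10 of them lands at 810 of 1,100 (about 73.6%), so `formatDelta(coverageDelta(...))` reports `-6.36%`.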

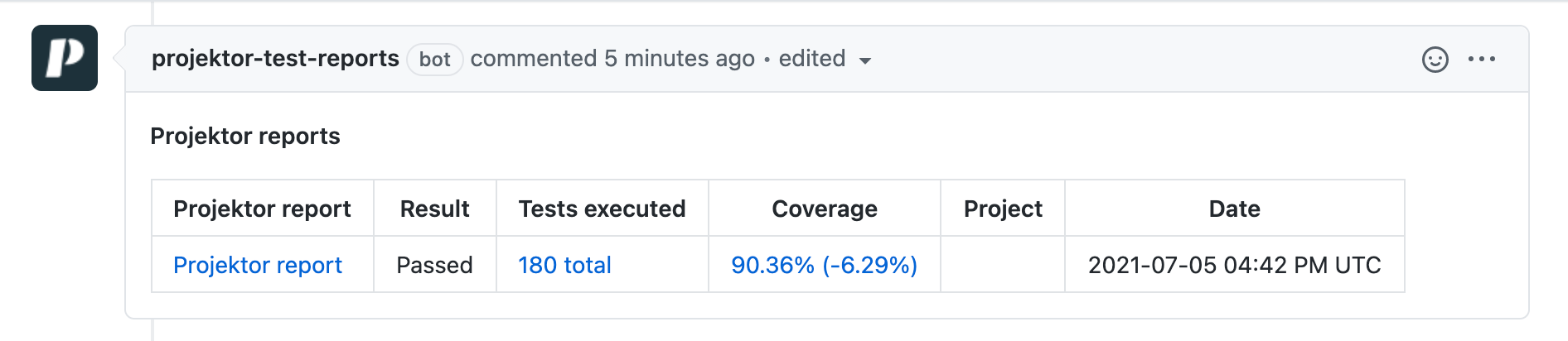

For example, one of the features I built in the Projektor test reporting tool was automatically calculating the change in code coverage from a pull request to the mainline branch and adding that as a comment to the pull request in GitHub:

In this example, the changes in the pull request decreased the code coverage by more than 6%. Putting this information directly on the pull request makes it easy for both the author of the changes and the reviewers to see that they need to dig into the changes to find out what's happening - potentially significant amounts of untested code were added, or tests were removed.

Conclusion

Remember our goal when writing tests: catching issues before they make their way to customers. Customers don't care how high our test suite's code coverage is if important pieces of our software are broken.

But code coverage can help automate some of the mundane work of code change reviews, freeing up the reviewer to spend their mental energy on more important parts of the review - ensuring the code behaves correctly, looking for a more maintainable or performant implementation, etc.

When used together, code coverage stats plus thorough manual review can be a potent combination to help ensure code changes are optimal and thoroughly tested.
