Faster test failure debugging with GenAI?

What are some ways we can use GenAI to make developers' lives easier?

A couple of years ago I had the chance to use GenAI to help build a natural-language-to-query-language translator in a software observability system. Users could ask a plain-English question such as "What are my slowest requests?" or "Show me my errors", and we used ChatGPT to generate the corresponding query in our system's query language. This let users quickly find what they were looking for without needing to be experts in our system. As with all things GenAI, it wasn't perfect 100% of the time - but it was close enough to give users either the answers they needed or a great starting place to dive in further.

That GenAI project was a ton of fun to help build. Afterward, I wondered: are there other places I could add GenAI capabilities in the software I build?

Fix failing tests faster

A while back I built a test result / code coverage / trend tracking tool called Projektor. The original goal of Projektor was to make it easier and quicker for developers to find and fix failing tests in their build.

Would adding GenAI to Projektor help developers fix their tests even faster?

Test case failure analysis

As an experiment, I added test failure analysis with ChatGPT into Projektor. Then users could get hints as to the cause of their test failure by just clicking a button in the Projektor UI.

To avoid coupling the Projektor code to a single GenAI provider, I defined an interface and gave it a concrete ChatGPT implementation. Later I could add support for additional AI providers and let users choose which provider they want to use:

```kotlin
interface AITestFailureAnalyzer {
    suspend fun analyzeTestFailure(testOutput: String): TestFailureAnalysis?
}
```

Initially I added an implementation using ChatGPT as I was already familiar with its APIs.
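To make the decoupling concrete, here is a minimal sketch of how callers might pick an analyzer by provider name. Everything apart from the `AITestFailureAnalyzer` interface is an assumption for illustration: the shape of `TestFailureAnalysis`, the `CannedAnalyzer` stand-in (which returns a fixed string rather than calling any API), `DisabledAnalyzer`, and `selectAnalyzer` are hypothetical and are not Projektor's actual code.

```kotlin
// Hypothetical result type; the real TestFailureAnalysis is not shown in this post.
data class TestFailureAnalysis(val analysis: String)

interface AITestFailureAnalyzer {
    suspend fun analyzeTestFailure(testOutput: String): TestFailureAnalysis?
}

// Stand-in for a provider-backed analyzer: returns a canned answer instead of calling an API.
class CannedAnalyzer(private val providerLabel: String) : AITestFailureAnalyzer {
    override suspend fun analyzeTestFailure(testOutput: String): TestFailureAnalysis? =
        TestFailureAnalysis("[$providerLabel] analysis of: ${testOutput.lineSequence().first()}")
}

// Used when no provider is configured: never produces an analysis.
object DisabledAnalyzer : AITestFailureAnalyzer {
    override suspend fun analyzeTestFailure(testOutput: String): TestFailureAnalysis? = null
}

// Callers depend only on the interface, so adding a provider is a change to this map.
fun selectAnalyzer(
    providerName: String?,
    providers: Map<String, AITestFailureAnalyzer>
): AITestFailureAnalyzer =
    providerName?.let { providers[it] } ?: DisabledAnalyzer

suspend fun main() {
    val providers = mapOf("canned" to CannedAnalyzer("canned"))
    val testOutput = "Connection to localhost:5433 refused."

    // A configured provider yields an analysis; an unconfigured one yields null.
    println(selectAnalyzer("canned", providers).analyzeTestFailure(testOutput)?.analysis)
    println(selectAnalyzer(null, providers).analyzeTestFailure(testOutput))
}
```

Because the UI and server code would only ever see `AITestFailureAnalyzer`, swapping or adding providers stays a wiring concern rather than a change to the calling code.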

I used the ChatGPT model gpt-4o-mini as its pricing is 1/10 of the price of the gpt-4o model and gpt-4o-mini still yields extensive failure analysis output.

As for the prompt, one of the nice things about GenAI is ease of use - I don't need to craft a complex prompt to analyze a test failure.

My prompt was simply "Why did this test fail?" with the test failure output appended. That's it.
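As a rough sketch, building that prompt could look like the following. The helper name `buildFailurePrompt`, the constant `FAILURE_QUESTION`, and the blank-line separator between the question and the output are my assumptions here; only the question text itself comes from the prompt described above.

```kotlin
// The question from the post; the constant name is hypothetical.
const val FAILURE_QUESTION = "Why did this test fail?"

// Appends the raw test failure output to the question.
// The blank-line separator is an assumption, not necessarily what Projektor sends.
fun buildFailurePrompt(testOutput: String): String =
    "$FAILURE_QUESTION\n\n$testOutput"

fun main() {
    val testOutput = "AssertionError: Timed out retrying after 5000ms"
    println(buildFailurePrompt(testOutput))
}
```

The resulting string would then be sent as the user message to whichever provider is configured.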

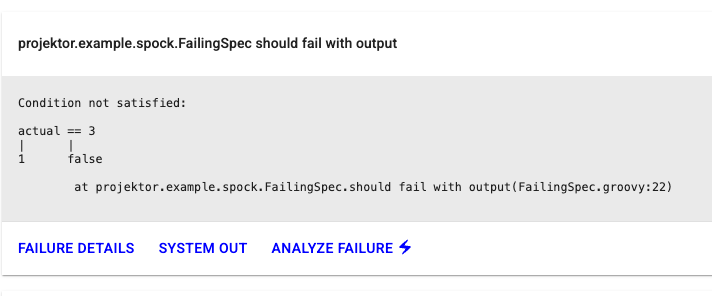

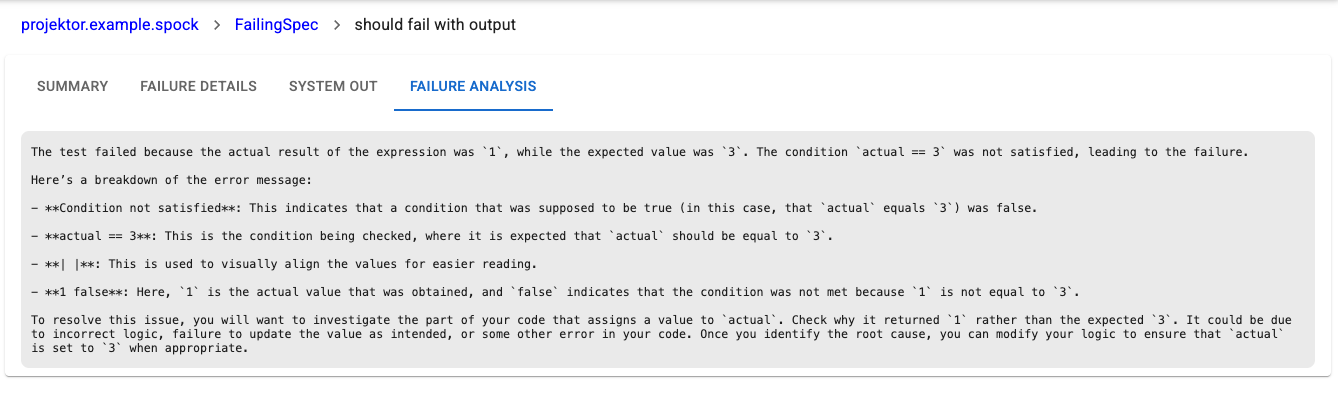

Then I added a way to trigger the test failure analysis from the Projektor UI:

And display the test failure analysis results to the user:

Test failing on application startup

As an example, let's look at an application test case that fails at app startup:

```text
com.zaxxer.hikari.pool.HikariPool$PoolInitializationException: Failed to initialize pool: Connection to localhost:5433 refused. Check that the hostname and port are correct and that the postmaster is accepting TCP/IP connections.
    at app//com.zaxxer.hikari.pool.HikariPool.throwPoolInitializationException(HikariPool.java:606)
    at app//com.zaxxer.hikari.pool.HikariPool.checkFailFast(HikariPool.java:593)
    at app//com.zaxxer.hikari.pool.HikariPool.<init>(HikariPool.java:101)
    at app//com.zaxxer.hikari.HikariDataSource.<init>(HikariDataSource.java:80)
    at app//projektor.database.DataSourceConfig$Companion.createDataSource(DataSourceConfig.kt:44)
    at app//projektor.ApplicationKt.main(Application.kt:89)
    ...
```

Sending the full failure over to ChatGPT yields this analysis:

```text
The test failed due to a connection issue with the PostgreSQL database. The specific error message is:

Connection to localhost:5433 refused. Check that the hostname and port are correct and that the postmaster is accepting TCP/IP connections.

This indicates several potential problems:

1. **Database Not Running**: The PostgreSQL server might not be running on your local machine. Ensure that the PostgreSQL service is up and accepting connections.

2. **Incorrect Port**: The test is attempting to connect to PostgreSQL on port `5433`. Verify that PostgreSQL is configured to listen on this port. The default port for PostgreSQL is `5432`. You might want to check your PostgreSQL configuration file (`postgresql.conf`) to see if the port is set to `5433` and whether the server is correctly configured to listen on that port.

3. **Firewall/Network Issues**: If the PostgreSQL server is running, there might be firewall rules or network policies preventing access to the specified port. Make sure that your firewall settings allow connections on port `5433`.

4. **TCP/IP Settings**: Check the PostgreSQL configuration to see if it is set to allow TCP/IP connections. This is typically managed in the `postgresql.conf` file through the `listen_addresses` setting, and you may also need to check the `pg_hba.conf` file to ensure that the appropriate host and user permissions are set up for connections.

5. **Network Interface Configuration**: If you're running PostgreSQL in a containerized environment or a virtual machine, ensure that the network interface configurations allow for connections to `localhost` or the specific IP address you are trying to connect to.

6. **Connection String or Configuration Error**: Make sure that your application's connection string or configuration is set up correctly to point to the correct database instance, user, and password, in addition to the hostname and port.

7. **Postmaster Not Accepting Connections**: Finally, the error suggests checking if the "postmaster" (PostgreSQL server process) is accepting connections. This can be done by trying to connect to the database using a tool like `psql` or a database client, specifying the same hostname and port.

In summary, start by ensuring that the PostgreSQL server is running properly and is configured to accept connections on the correct port, then check your application settings to confirm that everything is set up correctly.
```

The analysis points out some helpful places to start the investigation:

- Is the database running?

- Is the port correct? (it's running on a non-standard Postgres port)

- Are there network or access issues making the database unreachable from the application?

And the actual cause is the first one - I generated this test failure by shutting down the database before running the test.

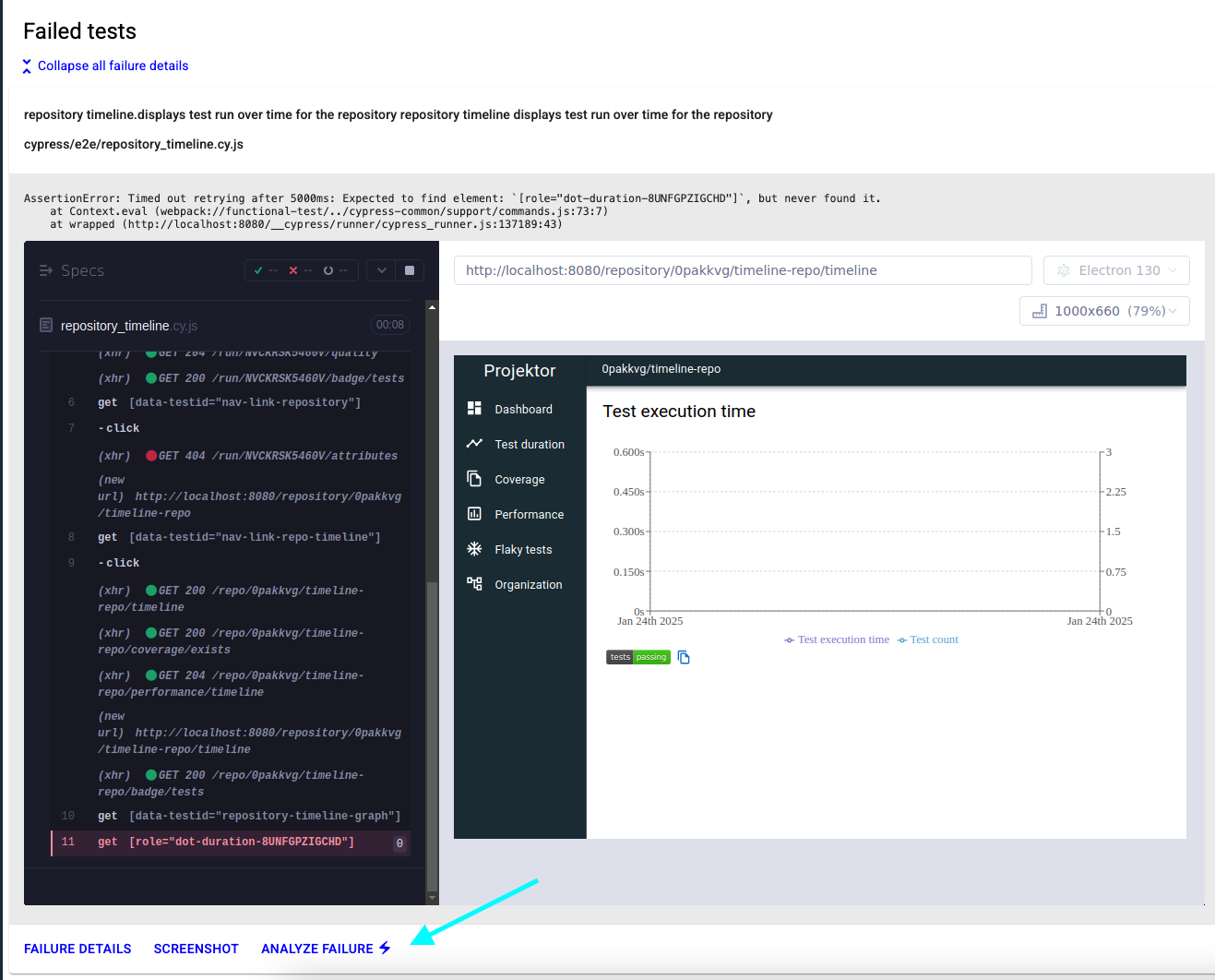

Failing Cypress browser test

This next failure is a Cypress browser test:

```text
AssertionError: Timed out retrying after 5000ms: Expected to find element: `[role="dot-duration-8UNFGPZIGCHD"]`, but never found it.
    at Context.eval (webpack://functional-test/../cypress-common/support/commands.js:73:7)
    at wrapped (http://localhost:8080/__cypress/runner/cypress_runner.js:137189:43)
```

Clicking the "Analyze Failure" button will analyze the test failure:

And yield an analysis such as this:

````text
The error message you're encountering indicates that a test in a Cypress-based test suite has failed due to a timeout when trying to find an element with the specified selector `[role="dot-duration-8UNFGPZIGCHD"]`. Here are some possible reasons why this test may have failed and some suggestions for troubleshooting:

1. **Element Not Present**:
   - The most likely reason is that the element with the role `dot-duration-8UNFGPZIGCHD` does not exist in the DOM at the time the test is executed. This could be due to changes in the application’s state that prevent the element from rendering.

2. **Timing Issue**:
   - The element may not be present yet because it takes longer than expected to load. This could be due to slow network conditions, slow server response, or heavy client-side processing. Consider increasing the timeout or using `cy.wait()` to pause the test before checking for the element.

3. **State of the Application**:
   - Ensure that the application is in the correct state to display the element you’re testing. If this element is dependent on prior actions or specific states, verify they have been completed successfully.

4. **Selector Issues**:
   - The selector `[role="dot-duration-8UNFGPZIGCHD"]` might be incorrect. Double-check the role attribute in the rendered application. If the role or any part of the selector is dynamic (changing on each render), your test may need to be updated accordingly.

5. **Visibility of Element**:
   - The element might be present but not visible. Make sure that it is not hidden by CSS or conditional rendering.

6. **Test Environment**:
   - Verify if the test is running in the correct environment and that all dependencies (like services the application relies on) are functioning.

7. **Error in the Application Code**:
   - There might be a bug in the application that is preventing the element from rendering. Check the application logs or run the application manually to ensure there are no runtime errors.

### Suggestions for Resolution

- **Debugging**: Use `cy.debug()` or `cy.log()` to output useful information just before the assertion to understand the current state of the application and what’s in the DOM.

- **Increase Timeout**: If timing is an issue, consider increasing the default timeout for finding the element:
```javascript
cy.get('[role="dot-duration-8UNFGPZIGCHD"]', { timeout: 10000 }).should('exist');
```

- **Add Waiting Mechanisms**: If there are asynchronous operations that load the element, consider using appropriate commands or waiting strategies:
```javascript
cy.wait(2000); // For demonstration, not a best practice. Prefer to wait for specific conditions.
```

By investigating these areas, you should be able to identify why the test failed and take the necessary steps to correct it.
````

Again, while GenAI can't immediately solve all of your failing tests for you, it can help with ideas on next steps.

This analysis yields some helpful hints, such as:

- Adding `cy.debug()` or `cy.log()` to get more detailed information about the failure

- Adding a longer timeout for the specific element to appear

- Adding a wait, while also calling out that a static wait time isn't a great idea - wait for a condition instead

- And more!

Not a panacea

The above examples were non-trivial failures with a decent amount of context in the failure messages. Analysis with ChatGPT offers hints and ideas for those scenarios, but it isn't going to magically solve test failures with minimal context.

For example, when analyzing this test failure:

```text
Condition not satisfied:

actual == 3
|      |
1      false

    at projektor.example.spock.FailingSpec.should fail with output(FailingSpec.groovy:22)
```

ChatGPT tries to offer ideas, but realistically there isn't enough here in the failure message for anyone - human or machine - to quickly find and fix the cause:

```text
The error message you provided indicates that a test case in a Spock specification failed because the actual value did not match the expected value. Let's break down the message:

- **Condition not satisfied**: This indicates that an assertion in your test has failed.
- **actual == 3**: This is the assertion that is failing. The test expected the actual value to be `3`.
- **| |**: This part of the message shows the comparison. The left side is your actual value, and the right side is the expected value.
- **1 false**: Here, `1` is the value that was evaluated in the test (this is what `actual` is), and `false` indicates that this does not satisfy the condition that it should be equal to `3`.
- **at projektor.example.spock.FailingSpec.should fail with output(FailingSpec.groovy:22)**: This part shows where the failure occurred, specifically in `FailingSpec.groovy` at line 22.

### Possible Reasons for Failure:

1. **Wrong Value Calculation**: The actual value `1` might be coming from some calculations or method calls that are not returning what you expect. You might want to check the logic that produces this value.
2. **Test Setup Issues**: Ensure that any setup or stubbed data used in the test is correct. Misconfiguration in the setup, such as incorrect mock responses, could lead to unexpected actual values.
3. **State Issues**: If the test relies on shared state or if some dependencies are not reset properly between test runs, this could lead to unexpected values.
4. **Logic Errors**: If the code being tested contains bugs, this could cause it to return incorrect results.

### Steps to Diagnose the Issue:

1. **Review the Test**: Look closely at the test case to understand how the actual value `1` is being generated.
2. **Debugging**: Use debugging tools to step through the code that generates the actual output. This may help identify why the output is not as expected.
3. **Print Statements**: Add logging or print statements in the code to identify the flow of data to understand where things are going wrong.
4. **Check Expected Value**: Ensure that `3` is indeed the expected value, and evaluate if the conditions that lead to it should be updated.

By investigating these areas, you should be able to identify the cause of the test failure and address it accordingly.
```

ChatGPT does still offer some basic test debugging hints, such as checking that the actual and expected values are correct, stepping through the code, etc. Adding more context to this failure in the test code could help both the humans and the robots debug this test failure more easily.
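As an illustration of what "more context" can mean, here is a small Kotlin sketch (an analogue, not the Spock spec from the example above). The function under test, `countOutputLines`, and both assertion helpers are hypothetical names made up for this comparison; the point is only the difference in the failure message.

```kotlin
// Hypothetical function under test: counts the non-blank lines in some captured output.
fun countOutputLines(output: String): Int = output.lines().count { it.isNotBlank() }

// Bare assertion: on failure, the message says nothing about what was being checked.
fun bareAssertion(actual: Int) {
    check(actual == 3)
}

// Assertion with context: the message states the expectation, the actual value, and the input.
fun assertionWithContext(actual: Int, input: String) {
    val shownInput = input.replace("\n", "\\n") // keep the input on one line in the message
    check(actual == 3) {
        "Expected 3 non-blank output lines but got $actual for input: $shownInput"
    }
}

fun main() {
    val input = "line one\n\n"
    val actual = countOutputLines(input)

    // Print both failure messages side by side for comparison.
    println(runCatching { bareAssertion(actual) }.exceptionOrNull()?.message)
    println(runCatching { assertionWithContext(actual, input) }.exceptionOrNull()?.message)
}
```

The second message gives a reader, or a model, the expectation and the input that produced the wrong value, which is the kind of detail missing from the bare `actual == 3` failure.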

Conclusion

Analyzing test failures with GenAI tools such as ChatGPT can help give developers hints and ideas on next steps to debug failing tests. However, GenAI isn't magic - it can't solve all test failures, especially ones with minimal context. But GenAI can help jump-start investigations and hopefully help developers solve their test failures a bit more quickly.